The AI-DLC. Why Your SDLC Is Holding Back Your Engineering Velocity

Your engineering organization is treating AI agents like a faster keyboard. That’s why you’re not seeing results.

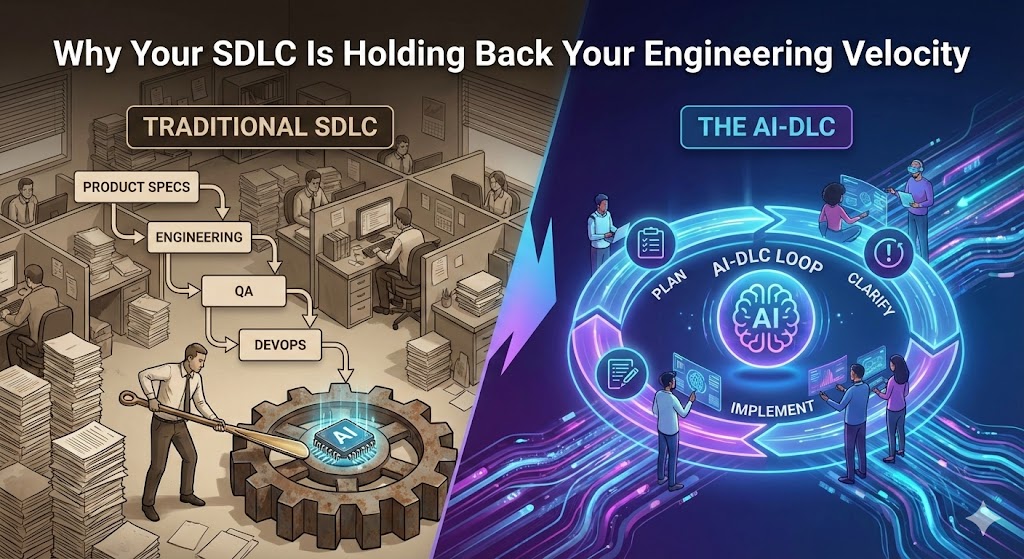

The SDLC wasn’t designed for AI. It was designed around human constraints: context-switching costs, communication overhead, specialization bottlenecks. Product Managers wrote specs. Engineers interpreted them. QA validated. DevOps deployed. Each handoff added days. Each role existed because humans can only hold so much context at once.

AI agents don’t have those constraints. But you’re still running them through a process built for people who do.

The Shoehorn Problem

Most organizations are bolting AI onto existing workflows and wondering why velocity hasn’t changed. Engineer opens agent, asks for code, drops it into the same sprint cycle, waits for the same review queue, deploys through the same pipeline.

You’ve automated the typing. You haven’t changed the system.

Meanwhile, vibe coders are shipping entire products in hours. I’m not here to defend vibe coding. Decisions made in isolation without full understanding are bad decisions, full stop. But we can’t ignore the signal: the speed of iteration has fundamentally changed. Our processes haven’t.

The AI-DLC Loop

If you’ve used Claude Code, Codex, or OpenCode, you’ve noticed something: you get dramatically better results when you start in plan mode. That’s not a feature quirk. That’s the foundation of a new development lifecycle.

The AI-DLC operates on a tight loop: Plan → Clarify → Implement.

AI creates a plan based on a PRD where business outcomes are defined and impact is known. This is the highest-leverage moment in the entire cycle. AI won’t push back on bad requirements, and you can burn through tokens fast building the wrong thing. The system then surfaces ambiguities that require human judgment. A collaborative team provides context, makes quick decisions in real time. Those decisions inform implementation. Loop restarts until complete.

Three phases emerge:

Ideation: AI transforms business intent into technical requirements. The PRD isn’t a document that gets thrown over a wall. It’s a living spec that the agent interrogates.

Build: AI implements against those requirements, but implementation isn’t a black box. The plan-clarify-implement loop runs continuously. Security, testing, edge cases all get surfaced through clarification, not bolted on after.

Deploy: Shipping, monitoring, iteration. The loop doesn’t stop at merge.

Why Cross-Functional Teams Matter More, Not Less

Here’s the part most people get wrong: they assume AI replaces collaboration. It intensifies it.

When engineers typed code, they had time to think between keystrokes. They could context-switch to Slack, chase down a PM, wait for a meeting. The pace allowed for loose coordination.

The AI-DLC doesn’t wait. Clarification requests come fast. Decisions need to happen in real time. The experts in product, design, security, and infrastructure need to be in the loop, watching their vision materialize and course-correcting immediately.

Teams that adapt their AI-DLC to their specific business context will accelerate. Teams that keep shoehorning agents into waterfall-with-sprints will keep wondering why AI hasn’t changed anything.

The human in the loop isn’t optional. It’s the entire point.

What This Means For You

Your engineering organization needs to answer three questions in the next 30 days:

Is your current process designed for AI or just tolerating it? Look at where AI agents sit in your workflow. Are they accelerating the system or just executing faster inside a slow system? If your sprint cycles, review queues, and deployment pipelines haven’t changed, you’re shoehorning.

Who owns the Plan-Clarify-Implement loop? The AI-DLC requires real-time decision-making from people with context. If your product, engineering, and domain experts aren’t collaborating synchronously when the agent surfaces ambiguity, you’re building on assumptions. Someone needs to own that collaboration model.

What does your PRD quality look like under pressure? In traditional development, vague requirements get clarified over days through conversation. In the AI-DLC, vague requirements get filled with assumptions in minutes. Your PRD is now the spec for an automated system. Treat it like one.

The companies winning with AI aren’t the ones with the best models or the most tokens. They’re the ones who rebuilt their development lifecycle around what AI actually needs: tight feedback loops, real-time collaboration, and humans in the loop making decisions that matter.

The SDLC served us well. It’s time to let it go.