Why Your Engineering Team is Wasting 80% of AI Agent Potential

Your engineers are using AI agents. They’re burning through tokens. And they’re barely moving faster than they were six months ago.

The problem isn’t the tools. It’s that you’re treating intelligent agents like fancy autocomplete.

Most engineering organizations approach agent-driven development like this: developer opens a chat window, asks for code, copies the response, realizes it’s wrong, asks again, loses context, starts over. Rinse and repeat fifteen times per feature. This is $200/hour engineering talent doing the work of a project manager for a probabilistic system.

The companies that figure out agent architecture in 2026 will 10x their engineering velocity. Everyone else will just 10x their bills.

The Chat Trap: Why Smart Engineers Are Working Harder, Not Smarter

Let’s be clear about what most teams are doing with AI agents today:

Traditional chat-based coding looks like productive work. Developer asks agent to create an endpoint. Agent responds. Developer reviews, finds issues, asks for auth. Agent responds. Developer spots missing validation, asks again. Agent responds. Developer needs tests, asks again. Developer becomes the integration layer, managing context, identifying gaps, orchestrating work across 15+ interactions.

This is not automation. This is outsourcing your engineering judgment to a system that forgets context every few minutes.

The fundamental problem: chat interfaces force serialized work with manual context management. Every session starts from zero. Agents have no knowledge of your codebase structure, architectural patterns, or constraints beyond what you explicitly provide. Context gets lost between messages. You’re not collaborating with an intelligent system—you’re supervising a very fast, very forgetful junior developer.

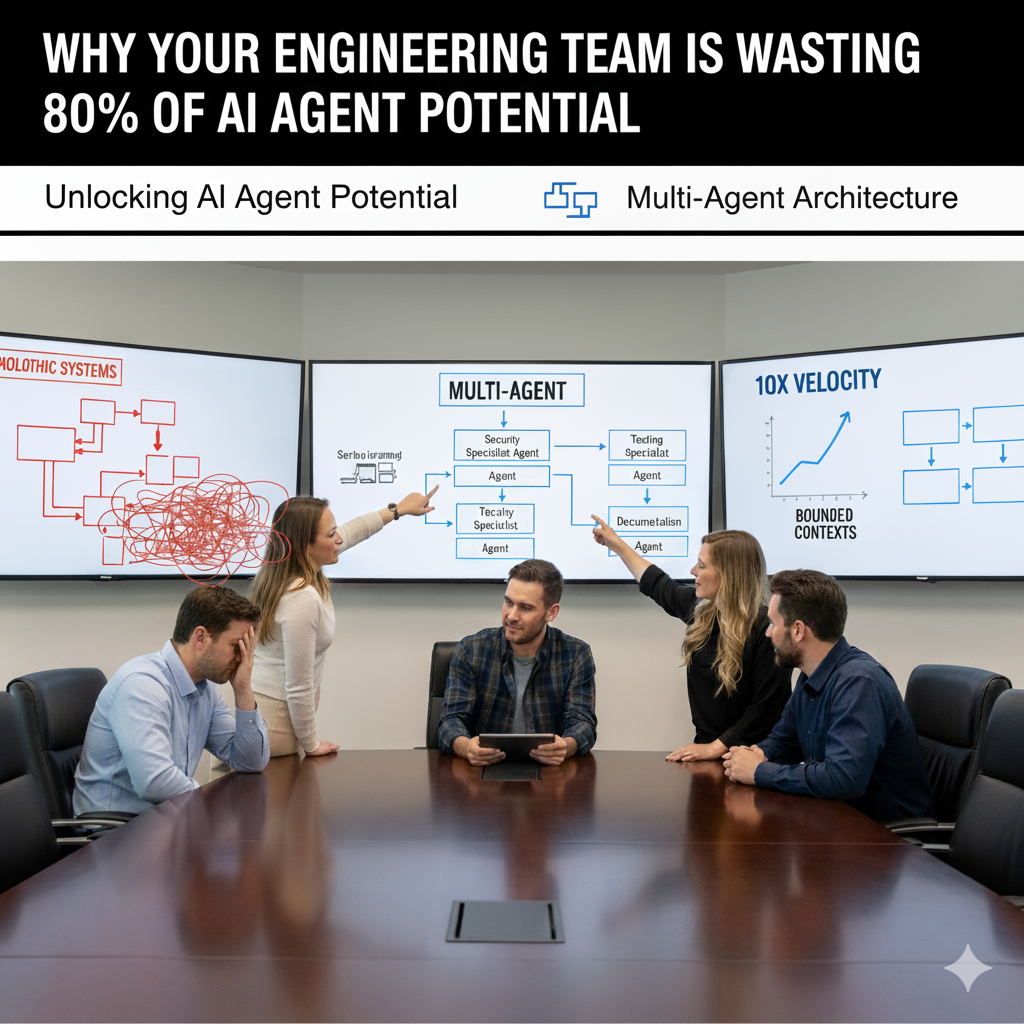

What Proper Agent Architecture Actually Looks Like

The organizations getting real velocity gains aren’t using better models. They’re using better architecture.

Single configured agent with persistent context changes the equation. Instead of fifteen interactions, you get three. The agent maintains persistent repo context—codebase structure, architectural patterns, testing conventions. You provide a requirement. Agent scaffolds the complete implementation using established patterns. You review integrated output. Agent fixes edge cases. Done.

The difference: agents have the context to make architectural decisions, not just generate isolated code snippets.

Multi-agent orchestration is where velocity compounds. Your job becomes defining requirements once. An orchestrator distributes work to specialized agents with bounded contexts:

API Specialist Agent receives the requirement, understands REST patterns and existing endpoints, implements the endpoint with proper error handling, produces an API contract and passes schema plus auth requirements to the security agent.

Security Specialist Agent receives the schema and auth requirements, applies auth patterns and security policies from the codebase, generates auth middleware and input validation, passes validation rules to testing.

Testing Specialist Agent receives the implementation and validation rules, knows your test patterns and coverage requirements, generates unit tests, integration tests, and edge cases, passes coverage report to documentation.

Documentation Agent receives all implementations and existing docs structure, generates API documentation and OpenAPI spec updates.

Your involvement: one comprehensive PRD input, one review of the complete integrated feature.

This Is Just Good Software Engineering

Here’s what multi-agent architecture actually is: separation of concerns applied to AI systems.

We’ve known for decades that monolithic systems with unbounded context fail. You don’t build a single class that handles database access, business logic, UI rendering, and deployment. You create focused components with clear interfaces.

Small, focused contexts instead of one agent trying to do everything. Clear interfaces between components through explicit handoff protocols. Specialized responsibilities where each agent has a single job. Explicit contracts defining what each agent produces and consumes.

The question isn’t whether multi-agent is better than monolithic agents. The question is why you’d build your AI systems using architectural principles you abandoned in your codebase fifteen years ago.

Your PRD is Now a Technical Specification

If agents are specialized components with bounded contexts, then PRDs become the specification for your agent system.

In traditional development, vague PRDs get clarified during planning. Engineers ask questions. Ambiguity gets resolved through conversation. Humans fill in gaps with business context and common sense.

In agent-driven development, vague PRDs get filled in with assumptions. Each agent makes different assumptions. Assumptions compound across handoffs. What you get is coherent, well-formatted code that implements the wrong solution.

Your new bottleneck is PRD quality, not coding speed.

A bad spec in a traditional workflow means a few wasted days. A bad spec in a multi-agent workflow means four agents built perfectly integrated components that solve the wrong problem. Debugging multi-agent misalignment is significantly harder than just writing the code yourself.

The Complacency Trap: Fast Output Looks Like Good Output

Here’s the uncomfortable truth: agents don’t make you faster if you’re checking out.

They amplify your judgment—good or bad. And polished-looking code is easier to rubber-stamp than obviously broken code.

Engineering review complacency shows up like this: tests pass, code follows established patterns, no obvious bugs. What gets missed: subtle performance issues, incorrect business logic implementation, security edge cases, technical debt that compounds across ten agent-generated features.

Product review complacency looks similar: meets acceptance criteria, UI looks correct. What gets missed: wrong user flow for edge cases, features that technically work but don’t solve actual problems, inconsistencies with product strategy because PRD was ambiguous.

The risk isn’t that agents produce bad code. The risk is that agents produce coherent, well-structured code that passes lazy review but fails in production.

Agents let you move 10x faster. If you’re not thinking 10x harder about what you’re building and how, you’re just creating problems faster.

The human judgment layer is not a formality. It’s the only thing preventing high-velocity failure. Speed without judgment equals expensive mistakes at scale.

The Tool Decision You’re Overthinking

Here’s what every CTO asks first: “Which tool should we standardize on?”

Wrong question.

The tool landscape splits cleanly into model-specific (OpenAI Codex, Claude Code, Gemini CLI) and model-agnostic (Cursor, GitHub Copilot, Windsurf). Model-specific gives you peak performance today with complete vendor lock-in. Model-agnostic gives you flexibility as models evolve with slightly worse performance now.

Neither choice matters if your setup is broken.

The real decisions that determine success or failure:

You’re making a vendor bet either way. Model-specific tools lock you into one AI provider. Model-agnostic platforms lock you into one orchestration layer. Pick the risk that fits your business context—vendor performance or platform flexibility—then move on. Your engineers wasting weeks evaluating tools is more expensive than picking the wrong one.

Standardization kills velocity until you need it. Let engineers use whatever works while you’re exploring. The moment you start building multi-agent workflows, you need standardized handoff protocols or agents can’t talk to each other. Standardize the orchestration layer and handoff formats. Let individual teams pick tools that fit their workflow.

The pattern: architecture decisions matter, tool decisions don’t.

Why Most Teams Will Fail at This

The failure mode is predictable: you’ll move too slow or too fast, both ways lose.

Moving too slow looks like endless tool evaluations, pilot programs that never graduate to production, waiting for the “right” moment to start. Your competition isn’t waiting. Every month you delay is a month your team isn’t learning, your engineers aren’t building muscle memory, your organization isn’t discovering what works. The learning curve is real and steep. Six months from now, you’ll wish you started today.

Moving too fast looks like mandating tools before engineers understand the workflow, standardizing patterns before you know what works, building complex multi-agent systems before you’ve shipped a single-agent feature. You get tool sprawl with no shared learnings, frustrated engineers who feel micromanaged, and expensive agent orchestration that doesn’t deliver value.

The companies that win do something different: they move fast on learning, slow on standardization.

Start with two or three engineers on real features using whatever tools they want. Not toy problems or side projects—actual work. Give them two weeks, then force them to document what worked and what failed. Not “here’s what we learned” bullshit, but specific examples: “Agent X nailed this pattern but completely failed at that one.”

Take those learnings and expand to a team. Build your first agent-ready PRD template. Ship one feature end-to-end with a configured agent. Document the handoff points where context got lost or assumptions diverged.

Only then standardize your orchestration layer. Define how agents communicate, what context gets preserved, how quality gates work. Measure actual success rates—not vibes, not “feels faster,” but data on agent-generated code that ships without changes.

This takes quarters, not weeks. If you’re not willing to invest that time, you’re not serious about agent-driven development.

The Competitive Reality You Can’t Ignore

Let’s be direct: this isn’t optional anymore.

Your competitors are using agents right now. Some are doing it well, most are doing it badly, but they’re all learning faster than you if you’re standing still. Your best engineers are using these tools whether you’ve approved them or not. The difference is whether they’re learning in a structured way that compounds across your organization or wasting time in isolation.

Talent expectations have shifted. Engineers who’ve experienced 10x productivity gains with proper agent architecture won’t go back to companies that treat AI like a novelty. You’re not just competing for customers anymore—you’re competing for engineers who can actually use these systems effectively.

The learning curve is the real cost. Six months from today, you’ll either have an engineering organization that knows how to ship features with multi-agent workflows, or you’ll be starting from zero while everyone else is optimizing. The sooner you start, the sooner you learn, the faster you can scale.

Start messy, but start deliberate. Document everything. Share learnings across teams. Run working sessions where engineers show what works and what fails. Build shared context about what good agent architecture looks like in your specific codebase.

What This Means for You

Your engineering organization needs to answer three questions in the next 30 days:

Where are you actually starting from? Not where you think you should be, but where you are. Some engineers experimenting? Teams using agents but no shared practices? Multiple agent workflows with no standardization? Be honest about current state—it determines what you do next.

What’s your architectural stance? How will you move from chat-based coding to configured agents to multi-agent orchestration? What are your non-negotiables for context management, handoff protocols, and quality gates? Architecture before tools, always.

How will you prevent velocity from becoming chaos? What does rigorous review look like when code appears polished? How do you catch bad assumptions before they compound across agent handoffs? Who owns PRD quality and agent system architecture?

The companies winning with agents aren’t using the most sophisticated models or the newest tools. They’re applying decades-old software engineering principles—separation of concerns, clear interfaces, bounded contexts—to AI orchestration.

The question isn’t whether agent-driven development will transform your engineering organization. It will. The question is whether you’ll use it to 10x your velocity or just automate technical debt at scale.

Your architecture will make the difference.